Critical Future Tech

Issue #10 - August 2021

Welcome to August and, with it, some announcements.

After 10 months running the project with the format

Tech Headlines + Worth Checking + Interview, new editions

(including this one) won't feature the Tech Headlines anymore due to

the crazy amount of time required to prepare that section.

The focus will be kept on the interviews and the

Worth Checking material. To those who enjoyed reading about

tech news from a critical angle, you can do so on CFT's sister-site

bigtech.watch.

Dr. Kira Allmann, Public Engagement Researcher at the Ada Lovelace Institute, joins us for this tenth edition to discuss the digital divide, explain why it's more than simply not having internet access, and explore the role of Critical Tech Literacy when building future technology.

- Lawrence

Conversation with Dr. Kira Allmann

Postdoctoral Researcher at the University of Oxford

This conversation was recorded on Jul 13th 2021 and has been edited for length and clarity.

Lawrence - Welcome! Today we have the pleasure of talking with Dr. Kira Allmann.

Kira is a post-doctoral research fellow in Media, Law and Policy at the

Oxford Centre for Socio-Legal Studies. Her research focuses on digital inequality, how the digitalization

of our everyday lives is leaving people behind and what

communities are doing to resist and reimagine our digital futures at a

local grassroots level.

Kira, welcome to Critical Future Tech.

Kira - Thank you so much for having me. It's a pleasure to be here.

L I'm really happy to

have you for this tenth edition of Critical Future Tech, which is a

project that aims to ignite critical thought towards technology's

impact in our lives.

I am passionate about the positive impact of technology, but also

I'm equally obsessed with the potential negative side effects that

it can bring, right? And you are someone that has clearly a lot of

interest in understanding and reducing digital marginalization. And

I realized that when I read the Digital Exclusion Report that you did

for the Oxfordshire county libraries, right?

Before we get into all the topics that I want to go through with

you, I want to just talk a little bit about what may be a digital

divide by going through the story that you have in that report.

For the listeners, the report starts with a small story.

"A man that approaches a staff member of a public library. And

the staff member is kind of swamped in customer help requests here

and there. That man asks for a phone charger. Not a power outlet,

right? A phone charger. And the staff member says they don't

provide those for customers at which point the man says that he's

actually homeless and he has no way of charging his phone. He's

asking for that help 'cause he wants to charge his phone for a

bit. So the staff member realizes that this isn't your regular

digital help request and ultimately they're able to find a charger

for that man, which allows him to charge his phone."

So you volunteered as a digital helper for that library, right? And

what I want to ask you is: was that the moment that made you become

interested or that made you sensitive towards this sort of digital

divide? Was that the first time or were you subject to that before

that?

K That's such an

interesting question, thank you for that. It actually was not the

precise moment that got me interested in the role that libraries

were playing in bridging the digital divide. It was actually,

remarkably, one of many such moments that I had experienced.

I started volunteering at the library in part, because I did have a

broad awareness of the digital divide in the UK. It was the focus of

the research that I was just starting actually at that time in my

postdoctoral research fellowship on digital inequality. And really,

I just kind of wanted to give back.

When I set out to volunteer in the library, I didn't actually have

any intention for it to turn into a research project or a

collaboration with the county council library at all. It was really

just something I wanted to do for the community. But it became

really apparent that from day one - and I unfortunately can't

remember the specific scenes I saw on day one - it became really

apparent that this was actually a really important site for

observing the lived experience of digital exclusion on the ground.

In talking with fellow digital helper volunteers, other people who

were doing the same kind of volunteering that I was doing, and also

the library staff, I also learned that it was just really difficult

for the library to keep track or document or collect data given how

thinly spread they were on the ground on the really vital work they

were doing to help people like the man that I described in the

opening scene.

So I thought I had access to the amazing resources of a great

university institution, if I could somehow kind of put those

resources toward helping the library, get a bit better data on the

work they were doing and to kind of spotlight what was happening on

the ground then that seemed like a really good use of those

university resources.

So that's actually how the project came about, through constant

conversation with the library staff members that I was working with

everyday.

But to return to your original question, that was really just

one of many scenes that I observed as a digital helper in the

library. Certainly not necessarily the first or only one that made

me think differently about where we should be studying the digital

divide.

"The digital divide is actually a very complex concept that is very important because it has become a key contributor to inequality."

L Awesome. That intro showed that the digital divide can manifest in many ways. So I'm going to ask you: can you tell us what the digital divide is?

K Well actually it is a

little bit difficult to pinpoint a single definition of the digital

divide.

I think that when most people use the term in a kind of colloquial

everyday conversation, what people have in their minds is the gap

between people who have access to the internet and maybe internet

connected devices like computers and smartphones, and those who

don't have that access. That's kind of the simplistic "haves and

have nots" kind of dichotomy. That's the basic idea that a lot of

people have in their minds.

But the digital divide as you've rightly pointed out is a lot more

complex and nuanced than that and to call it "the" digital divide is

probably a little bit misleading, but we all do it, I do it as well.

There are actually quite a lot of intersecting overlapping

compounding divides that have a digital component to them.

Let me start by just quite simply explaining how scholars think

about the digital divide.

Scholars, basically, have stated that there are three levels of the

digital divide.

The first level being the one I just articulated, which is a divide

between those who have and don't have access to the internet.

The second level is more of a divide in skills and literacy. This is

basically saying you may have access to the internet, but you may

not actually be able to use those resources to their fullest

capacity because you just don't have the knowledge of how to use

them. And obviously there are many layers of skills and literacy

that might come into play on that level, the second level.

The third level is really on outcomes. How do you take your access

and your skills and literacy and turn them into meaningful, positive

outcomes in your life. Meaning maybe attaining greater educational

opportunities or greater economic gain.

Those three levels are kind of broadly what scholars talk about when

they talk about the divide, but even that is a little flattening at

times, because drawing those clear dividing lines between the levels

is often very difficult. They all intersect with one another and

affect one another in various ways. And of course, within each of

those levels, there are a lot of nuances and differentiations.

Also the experience of being digitally excluded is often compounded

by other forms of inequality. Things like linguistic inequality,

racial inequality, gender inequality, socioeconomic inequality. All

of these kinds of what we might call quite simplistically, offline

inequalities, compound and affect people's access to digital

resources like the internet and digital devices, but also how they

use them and what kinds of experiences they may have online, let's

say when they do get online.

So basically the digital divide is actually a very complex concept

that is very important because it has become a key contributor to

inequality. If you're interested in inequality, digital is a space

that we all need to be looking at all the time. And to relegate it

actually to just the issue of internet access, for instance, is

really kind of an oversimplification.

L Yeah, but that's

the most visible that you can go for. Especially since the pandemic

where everyone is remote there were a lot of cases in the U.S., in

Europe, places where you would think everyone has access to stable,

reliable internet, where that's not really the case.

And that is also one of the things that I read when researching some

of your work on rural areas and how they can be impacted and even

how they can overcome that with the example of the

community-led internet that has fiber optics, that is really an incredible story.

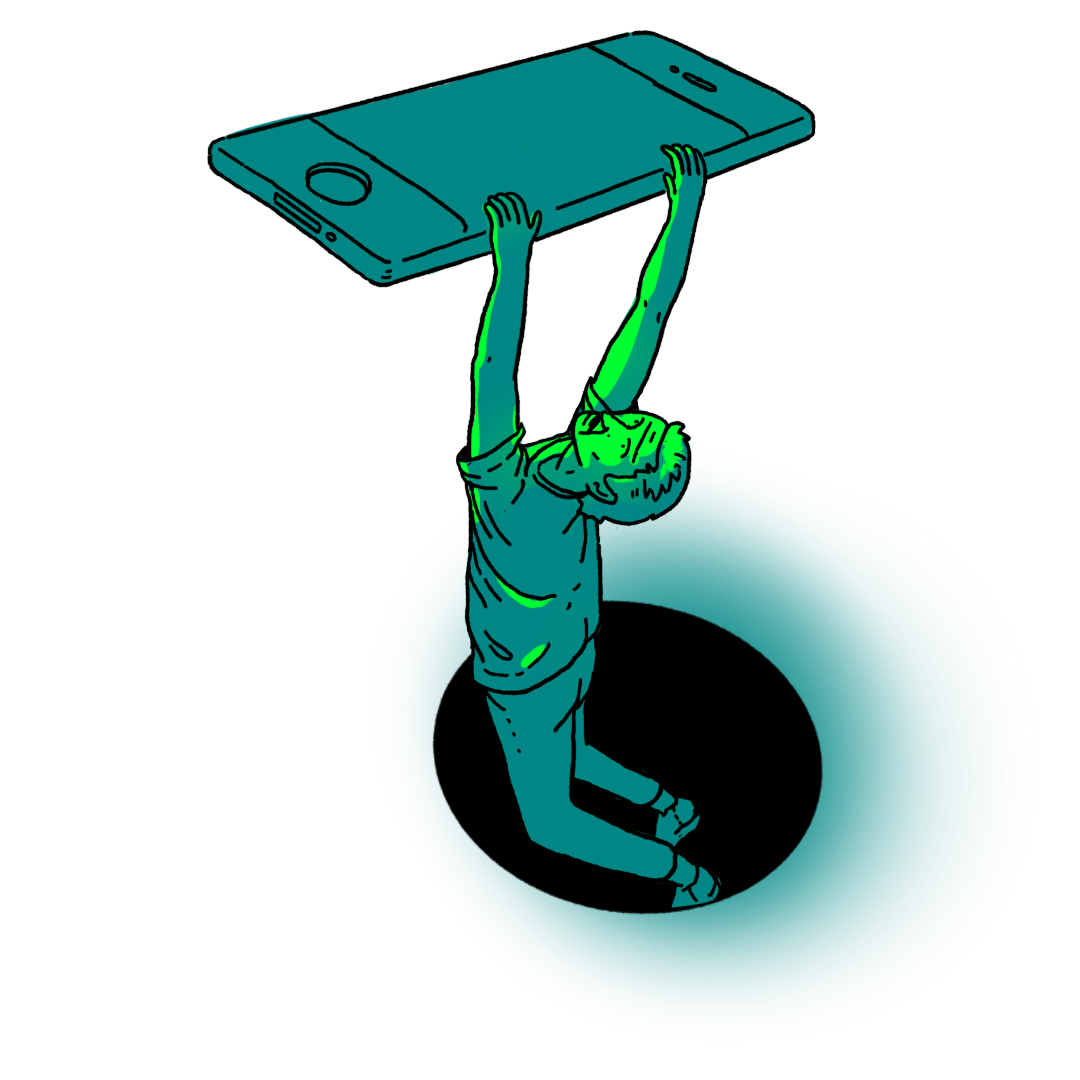

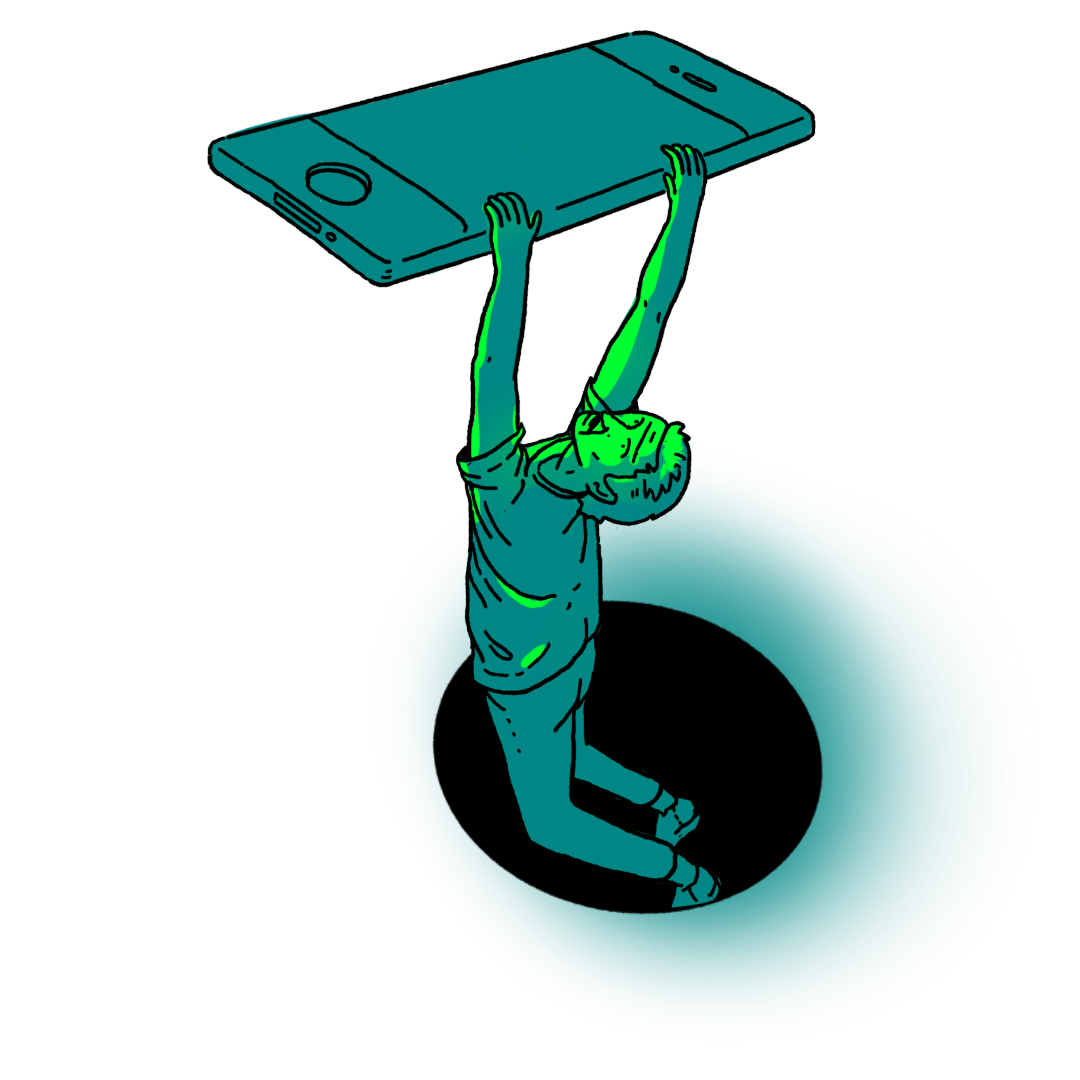

One thing that you mentioned when I first heard your talk was: I can

have a reliable internet connection, but because I don't have a high

income I don't have a Mac or I don't even have a computer. The only

thing that I have is my mom's smartphone.

That was very interesting because you believe that any youngster,

they are all literate. They can all work with Excel and do

spreadsheets and so on. And that's not really the case because of

that example that you gave.

That was for me, very interesting, because that is also a way of

divide, right? Again, you lack the hardware in this case to learn

and when you arrive at the marketplace, you're actually at a

disadvantage towards other people that have had the experience of

using say, you know, like a spreadsheet software or something like

that.

K Absolutely. And

actually that was something that I observed and that was told to me

in various interviews during the library project as well.

This issue of making assumptions, for instance, about what kind of

people will have access to what kinds of devices and you spotlighted

two key assumptions that often permeate expectations about the

digital divide.

One is that, basically, wealthier countries like European countries

and the United States don't have a digital divide problem because

the internet is ubiquitous. This is an assumption that is definitely

false as the pandemic has actually quite starkly revealed. And

another assumption is that young people are "digital natives" which

is a term that I think has been thoroughly critiqued and debunked by

other fantastic scholars and policymakers. But it's this idea that

basically young people kind of grow up around technology, so they

won't have any deficiencies in terms of digital literacy or access.

They'll be absolutely fluent in things like Excel like you

mentioned. They'll be fluent in smartphones, laptops, iPads,

everything.

The reality is that that just isn't true. What you see in a place

like public libraries, you see a lot of kids coming in, for

instance, who only have access to a smartphone. And when it comes

to, say, printing a document off that they need, for some reason,

maybe it's a payslip or something like that they really don't know

how to use even a keyboard and a mouse. And this was something I

heard from a lot of staff members that many of the students they

were dealing with were pretty flummoxed by the setup of a desktop

computer.

Even things like entering passwords, for instance, into a desktop

version of a platform like Gmail. Because a lot of us actually rely

on saved passwords and fingerprint ID and things like this on

smartphones, we don't retain a memory of what our passwords are and

when we suddenly have to enter it on a different platform, we get

locked out.

This is something you see a lot, especially among young people who

really only have single device literacy. That's something that I

tried to highlight a little bit in the library report, and I've

certainly brought it up in other forums as well around education and

digital inequality, because it tends to be kind of an invisible form

of digital inequality, largely because of those assumptions that

people make about certain demographic groups.

L The single device

literacy is an interesting term that also takes me to an idea which

is: the ecosystem of platforms and systems that you may interact

with — even just on a smartphone, if that's the only thing that you

got — is becoming more and more reduced.

For instance, in some countries, basically, Facebook is the

internet, you know? That's where you search, that's where you read

about things that others share. And the same ecosystem also exists

when you have packages where for X euros or pounds you will get free

access to Facebook, Instagram, Spotify, and a couple of other things

which have unlimited data so you are going to navigate that universe

almost exclusively, but not necessarily Wikipedia articles which

will use your data plan and then you will pay for that.

K Yeah, you're absolutely

right and the term that I usually apply to this phenomenon you're

describing, this kind of echo chamber phenomenon, is proprietary

literacy.

I basically mean that a lot of users who have limited access and

their access is through say a platform like Facebook, they become

very fluent in that platform and that company's toolkit basically,

but nothing really beyond that company's toolkit.

So another great example of this (well not great in the sense of

positive, it's just a good example to further illustrate the point)

is the prevalence of, for instance, Google Classroom in schools that

are under-connected to the internet. Google has stepped in in a lot

of cases where schools can't afford devices and internet access, or

have limited connectivity for various reasons.

Google has stepped in to help provide tools for students to be able

to get online and develop skills but usually these students then

only have access really to the Google suite of software and even

Google hardware like Google Chromebooks. And what happens is those

students wind up growing up sort of really familiar with Google and

not that comfortable, not that fluent in other platforms, other

proprietary software and other kinds of hardware.

I've spoken to teachers in rural schools that are members of the

Google Classroom program who say that their students basically only

want to use Chromebooks and that when they have the opportunity to

get a device for the first time, what they want is that Google

device and it's not surprising because the devices that they have

access to in the school are exclusively Google products.

And so that is also, I would argue, a very limited form of digital

literacy. It's quite narrow this platform or proprietary literacy.

"If we want that imaginative space to be open, it's best to cultivate literacy in a wide range of platforms and devices and also to think about digital use less as an issue of consumption than it is an issue of participation."

L That is very

interesting. And I don't want to get into monopoly or antitrust

thoughts right now but my question is: if you have a device, say the

Google Chromebook, you use all of Google's apps and Chrome and so on

and all of that allows you to interact with society, right? So

you're able to pay your taxes, to consult anything that you may want

and work and communicate and you're able to do that in that

ecosystem from Google, what's the problem with that?

What is the problem of being locked into that ecosystem? Or do you

see any problem with that, that person can live a digitally included

life?

K Arguably this

phenomenon is not new. Throughout the history of technology there

tend to be kind of dominant technologies that lots of people buy

into, they become more fluent and literate in the one that they

know. I remember for instance, I had a school that bought a lot of

Apple products when I was a kid and so I was a lot more comfortable

with Apple products because that was what I had.

It's not necessarily a new phenomenon but I think there is a reason

to be sort of just critical about it to kind of stick with that

theme. That's because we do live in a much more diverse digital

space than a monopolistic one. In fact, there are lots of different

products out there, there are lots of different companies competing

and arguably we want to live in an innovative dynamic future in

which new ideas are generated and there will be new companies and

new products and maybe even alternative ownership models for

platforms and things like that.

If we want that imaginative space to be open it's best, I think, to

cultivate literacy in a wide range of platforms and devices and also

to think about digital use less as an issue of consumption than it

is an issue of participation.

The thing about having sort of proprietary literacy as the

predominant form of literacy, especially for digitally excluded

communities - the communities that have limited access - what tends

to happen is that these users are really being cultivated as future

consumers of products. They're being motivated, they're nudged to

buy products that are produced by a particular company.

You may have various views on the usefulness or the value of that

socially but arguably it could potentially reduce competition in the

long run and it also views children, the student users of these

platforms as consumers first and citizens second.

I would suggest that that isn't really encouraging the kind of

diversity and dynamic thinking that we need in terms of building a

more inclusive digital future in the long run.

L Thank you. That's a

great answer and touches on something that I want to talk about a

little bit later, which is Critical Tech Literacy. We're hinting a

lot about people being critical of things, even though they are

great to be used like Apple and Google products. And by the way,

Apple is also another company that's very keen on having a foothold

on education.

So talking about digital divide: we understand that it's a complex

issue and it is manifested in different ways.

I am a technologist, I'm a software engineer. I build products

online for users around the world and I already know about some

things that can contribute to digital exclusion such as: it's

English only or it requires fast connections for you to connect so

if you can't go for that, then my product doesn't work for you and

I'm excluding you.

Those sorts of things are kind of known for the more attentive

technologists and so my question is: what are some things that can

hint at digital exclusion? Putting aside those obvious hurdles that

I just mentioned, what are things that I could be on the lookout for

or that maybe I'm not aware of as I'm building new digital products

that I can look for and anticipate and incorporate into my

solutions?

K Of course it's very

difficult to anticipate what a better kind of more inclusive build

will be without talking to users.

I'm an anthropologist so I always believe that the best way to get a

sense of what's actually happening on the ground in people's real

lives is to observe them in their everyday lives, doing ordinary

things. It tends to be very revealing. And this is slightly

different than arguing for something like user-driven design which I

also think is a very important aspect of design development.

But what you're asking is: how do you undercut your own assumptions?

And that's very difficult because it's very hard for all of us to be

so self-aware that we can be conscious of our own assumptions that

we build into our technologies.

Usually the best way to do that is to step out of our own

perspective and occupy somebody else's perspective for a while.

I can give an example of this from a conversation I had with a

library staff member, actually in Oxfordshire libraries, who runs

tablet and smartphone sessions mostly for pensioners — for elderly

folks — in the community. He was saying there are all these symbols

that especially tablets and smartphones use to navigate around menus

that a lot of older folks just don't really understand. I mean they

can functionally touch things and they know that an application will

open if you touch this thing and things like that but there are

things that are just not intuitive to a certain generation.

For instance how on earth would you know that a little circle with a

line coming out of it is a magnifying glass, and that means

"search"? I tend to refer to this as the visual vernacular of

platforms or apps.

There are a lot of things that we have intuitively come to

understand as users of digital technology that aren't necessarily

universal. The sort of three lines that indicate a menu - you can

expand into a menu - a lot of people find that confusing. A lot of

older folks don't see a camera app icon as being a camera. It

doesn't look like a camera to them, it's like a circle inside a

square and they say things like "how is this a camera"?

"The issue is that digital inclusion isn't a switch that just gets turned on at some point and then it's always on. It's actually more of a process where people can fall in and out of being included over the course of their lifetimes."

L To be honest I

threw that question out there not expecting a bullet list of things.

The first thing is of course be aware that your users may have

special needs that your product doesn't account for. Of course

understand your users, understand who you're building the product

or the service for. Talking with them is essential.

Right now you were talking about the icons and it's funny because

sometimes I'll be prototyping some interface and I'm like: "all

right I need a search icon here". So I go on this website that gives

me a lot of free and paid icons and I just type "search" and I have

a lot of magnifying glass icons, you know?

So there is this notion that like "that is a search icon", you know?

At least for web developers and designers and so on. If I say to my

designer colleague "put a search icon here", he's not going to put

anything else besides that. And it's interesting that some groups

may not realize that.

Do you think that that will come to an end at some point? We're

going to have a generation that has interacted so much with those

interfaces that at some point do you think this gap is going to

narrow itself because everyone is a bit more digital native to some

extent, or is new technology going to come up like VR or AR glasses

and then our generation, we're going to be like "whoa, I cannot

reason with this" [laughs]. Do you think that's going to be the

case?

K It's probably unlikely

to be totally eradicated. This problem is very unlikely to totally

go away and that's for a few reasons.

You highlighted one of them, which is that technology changes all

the time, very rapidly. And for a lot of us - especially those of us

who have been kind of consistently connected since let's say the

beginning of the digital age - it's even hard for us to remember

when those transitions occurred: when certain icons morphed into

other icons and when something became the standard symbol for search

or when something became the standard symbol for save and that's

because that change happens gradually and happens frequently.

As long as you're constantly connected you might experience the

change and take it on board, but not necessarily note it. I think

that the issue is that digital inclusion isn't a switch that just

gets turned on at some point and then it's always on. It's actually

kind of more of a process and people can fall in and out of being

included over the course of their lifetimes as well.

This is something that is very important for understanding why

the digital divide is unlikely to just kind of naturally close as a

function of demographic shifts: the idea that as young people get older

they'll just remain digitally connected and included, and we're just

not going to have a digital divide anymore.

The reason that's unlikely to be the case is for the reasons that we

were discussing earlier that the digital divide is actually a

function of a lot of compound inequalities. For instance people may

be highly digitally connected when they're employed, but then when

they become pensioners they're on lower incomes. They may actually

be only living off of their state pension for instance and due to

that, they may decide "I actually don't need internet connectivity

for the next few months or the next year, because it's a bit

expensive and I'll just roll that back".

And then if you're offline for a year or two years the digital world

does move on in that time and when you come back online a lot of

things can be really confusing.

This is something we can see already. For instance people who leave

school at 16 (you can leave school at 16 in the U.K.) and then maybe

are in and out of employment for a few years and then get a job that

requires digital skills, let's say in their twenties, will often be

very behind in terms of digital literacy, because they just had that

gap of a few years when they weren't regularly connected or maybe

they only had a smartphone and they kind of really didn't do that

much on a laptop and all kinds of applications have changed.

For instance, take regular Microsoft Word users: sometimes you get an

update on Word and you're like "where did everything go? I don't

know where anything is anymore". Just think of that on a much larger

scale: if you're a little disconnected for a few years due to

unemployment or lack of income or something like that - life stage

changes basically - that will continue to affect people basically as

long as inequality continues to affect society.

That's why the digital divide is unlikely to be really just purely a

demographic or a time problem, mainly because people fall in and out

of various levels of inclusion over the course of their lifetimes.

That's something that digital designers could certainly be aware of.

To return to your earlier question about what else designers can be

aware of. We talked about the visual digital world but one other

thing I wanted to mention was the importance of simplicity and how

many assumptions go into deciding what is simple for a user.

I know that a big thing in app design and development is intuitive

design: this idea that things should be as easy as possible for

users. But a lot of times what digitally fluent people like you or I

would assume is easy is actually very difficult for users who are

digitally excluded or digital novices — they're coming to devices

for the first time.

Even something like having to create a user account can create a

barrier to using a particular platform or application. Requiring

somebody to create an email address before they can use your

platform adds an additional layer of complication for a

user who may desperately need access to the platform

that you've built, if it's for something like, say, banking or welfare.

It's very important to think about what simplicity is to a user and

not to you as a designer.

"Critical tech thinking is about applying a critical lens to technology. This is increasingly important because of the fact that the digital world that we encounter today is not a fair one."

L I could go on about

discussions that sometimes I have with designers or fellow front end

developers about "No, just put a tooltip that just shows up when you

hover on it" and I'm like, "yeah I like that you're saving space but

if they don't know they can hover that thing and that thing has some

info there and they are not used to your interface, your product,

then that doesn't exist and you're not helping them." There are so

many stories like that and I'm going to use this to move to Critical

Tech Literacy.

Thinking critically about technology as a whole regardless of

whether you're a technologist like a programmer or a researcher. We

all use technology nowadays, it is virtually everywhere, it is

eating everything so it is important that we think about it

critically. I'm going to read a quote from one of your slides that I

screenshotted. I'm going to read that and then we can dive into it a

little bit.

"Critical Tech Literacy means cultivating skills to think

critically about how we engage with the life critical technologies

that have become essential to everyday life. It includes sometimes

taking a critical stance towards technologies that perpetuate or

create inequality and unfairness in society."

So, first I was like "wow, Critical Tech! that is the same name!

[laughs]" I went and researched it to understand what was out there

regarding this theme and I mainly found literature on how critical

it is for people to be literate in technology. In the sense of: you

need it to work, you need it to be competitive, to be productive.

But that's not really what you're saying in this sentence, right?

The floor is yours to expand on what you mean by Critical Tech

Literacy in this case.

K Critical Tech Literacy

is actually a term that I have alighted on that I've kind of started

using really only very recently, actually in that webinar

that you attended. And yeah, I am using it differently from the

literature that you described.

What I'm talking about is really kind of blending critical thinking

with digital literacy.

Digital literacy really deals with competencies: how can you use

technology and can you use it effectively for achieving your goals -

those outcomes that are part of the third level of the divide.

That's digital literacy. It's a nuanced concept but it's very widely

been adopted in policy circles.

Critical thinking is about applying a critical lens to technology. I

would argue that this is increasingly important because of the fact

that the digital world that we encounter today is not a fair one.

Especially in recent years, there's been a lot of excellent

scholarship and reporting on the ways in which bias is built into

technology, which should not be surprising because technology is a

social product.

Bias is built into so many things that we use in our everyday lives,

there's no reason we should assume that digital technology is any

different.

But still today, digital literacy is kind of approached - especially

in school curricula - as a set of competencies: "How do you deal

with digital technology? Are you able to perform certain tasks with

technology?" And in its sort of most critical form: "can you keep

yourself safe in the digital world?" These are the focuses basically

of digital literacy, especially at the school level.

I think that we really need to move more in the direction of

teaching kids to think critically about the technologies they use,

how the technologies are built, what biases have been built into

them and how to live balanced lives with technology.

Technology is pervasive and also largely built and marketed by

private companies that have an interest in cultivating consumers who

will continue to engage with those products in order to create value

for the company. What that means in the long run is that sometimes

that constant engagement isn't necessarily in the best interest of

the user.

How do we start thinking critically about the pervasiveness of

technology in our everyday lives?

That's really what I mean by Critical Tech Literacy. It's about

thinking critically about technology and asking, for the next

generation of tech users and designers: how do we ensure that

they're thinking about the assumptions that are built into

technology, about their own positionality in relation to technology

and how technology is a social product?

These are all concepts that are very widespread in academia, and we

use all kinds of complicated language to talk about them but they're

concepts that can be translated into a digital literacy program for

all ages. They're not really that complicated in practice and so my

argument for Critical Tech Literacy is that we should really take

some of these very important conversations that are happening in the

academy and make them a lot more widespread.

"If we want the technology marketplace to be dynamic and increasingly fair then we need to prepare students of technology today to be thinking like that."

L And I'm a hundred

percent behind that as you may imagine by having invited you to talk

about it.

I feel that technologists are more and more aware. It may not be as

mainstream as we would like it to be, but there are

things coming out in the mainstream: books like "Weapons of Math Destruction" and even documentaries such as "The Social Dilemma", which explains in very simple terms how technology can be biased

and can be used against you. And so we should be aware and be

critical about what we're building.

One thing that is funny, that is maybe just my perception, but when

you put the word "critical", people instantly are like: "Wow, you're

going to do destructive criticism. And what? You don't like

technology?" And that's not the thing. Actually, I love technology.

I work in that field and what I just don't want is to contribute to

things that are then going to have negative side effects for groups

without my even being aware that it is happening, right?

As technology becomes more and more pervasive, inevitably, it is

important we wonder what is going on and not just take it in a

passive manner.

My worry is that governments or schools or even your employers are

gonna say: "what's the concrete outcome for that?" How to use the

tool, how to navigate the web - that is understandable: you're

productive, you can get a better job.

But what is the advantage of being critical about technology? How

would you get buy-in from a company or from a government and explain

that we actually need Critical Tech Literacy on a more abstract

level, on a more existential level and not on a practical level? How

could you convince a company's management team or a government to

say: "we need more of this"?

K I think that there is

really a groundswell right now of increasing awareness, as you said,

of the issues related to how digital technologies can deepen certain

social inequalities and there's been a bit of a backlash against

that.

The debates that we've seen in Europe and the U.S. around data

management and privacy are kind of the tip of the iceberg and I

doubt that these issues are going to go away anytime soon. The

debates around things like Clearview AI, the scraping of personal

content without consent, what terms and conditions actually mean for

users, things like this. These are debates that are not going to go

away. Companies won't be able to dodge them, governments won't be

able to dodge them and the more awareness that people kind of

generally have, the more they will stay on the agenda.

Future technologies, whether they're built by companies or

governments or NGOs or individuals or whatever, are going to have to

be designed in fairer ways. That's the direction of

travel right now.

So it is actually very much in the interest of companies, governments

and schools to think about who the next designers of technology are

likely to be. Undoubtedly kids in schools today are growing up kind

of with ambitious plans for what technology should look like in the

future, because a lot of them are heavy technology users, that's the

reality.

If we want the technology marketplace to be dynamic and increasingly

fair - I would argue that that's a good social goal in and of itself

- then we need to prepare students of technology today to be

thinking like that. We need to prepare them to be questioning their

own assumptions, to be thinking about living in balance with

technology so that they can build better products that enable users

to have more control over their data.

And actually, I would also argue that while it is a kind of abstract

esoteric concept, this idea of critical thinking about technology,

there are some really concrete aspects to this.

So for instance, in that webinar that you attended (hosted by the

University of the Arts London), in the workshop component, we asked participants about their level

of confidence, for instance with different digital skills. And a lot

of people, because this was a very digitally literate crowd, ranked

really highly on things like "I can produce a Word document" and "I

can search the internet", "I can even discern quality information

from questionable information online", things like this.

But when it came to things like "I feel I have control over my

digital footprints (the data trail that I leave)", these kinds of

trickier areas where people are feeling kind of insecure, the

confidence level went way down.

And this was just in a small group of participants in this workshop,

but these are very digitally fluent people. When it came to things

like, "I feel like I have control over my data", or "I feel like I

can switch off when I want to", these were things that people ranked

pretty low in terms of their confidence.

Those are things that going forward, people are going to want to

have more control over and they're going to want to do. That's what

Critical Tech Literacy is all about, and that is going to affect the

entire economy around technology. And so it's got to be of interest

to companies, governments, and schools, unquestionably.

"Critical in and of itself does not mean you're always criticizing technology. It really just means developing an awareness and a kind of constant practice of reflection about the role of technology in our personal lives and in society and how technology is shaped by social forces."

L And I would even

just add something which is: from a purely competitive angle,

technology is first functional, right? I can write a document, I can

communicate with someone, I can find something that I'm looking for.

That's the functionality part of it. And we all love Google because

it's so great at delivering that functionality.

And as those needs are fulfilled by the services and the products

that we use and we become acquainted, we start looking maybe for a

sort of higher order need, which is: "I still want to retain some

control over more abstract, higher-level things such as my

privacy, how my data is shared."

So it's like a sort of Maslow pyramid where you have your functional

needs fulfilled and now you're moving towards those more abstract

needs that need to be fulfilled.

K Yeah, I think that's a

great addendum for sure and to echo something else you said as well,

I am not anti-technology either.

I love technology and I use Google and I have Apple products and I'm

also not against these companies just because they're companies. I

think you made the point earlier that it's quite common that people

hear the word critical and they think you mean criticism. And to be

fair, sometimes I do, sometimes I do mean criticism.

But critical in and of itself does not mean you're always

criticizing technology. It really just means developing an awareness

and a kind of constant practice of reflection about the role of

technology in our personal lives and in society and how technology

is shaped by social forces.

That is not value neutral, it has value. But it also isn't

inherently critical or anti-tech. And so I do think it is important

to constantly stress that it may lead to criticism: when things go

badly or when biases lead to exclusions that harm people, then it is

deserving of criticism. But that isn't necessarily what critical

means.

L What we're going for is building the futures that we were promised in science fiction. The good science fiction, the utopian one, not the dystopian one, right?

K Yeah, exactly! It

really is about building better futures for society!

My ethical orientation sees those futures as being more equal and

fair and inclusive and just and so those are the values that I would

argue need to be built into our social products like technology.

It's an optimistic view actually. It's not a negative destructive

view.

L And on that note, thank you so much for being here with us. It was a super interesting conversation. Tell everyone where they can keep in touch with you, and where they can follow you, your work and your research.

K Great! Thank you so

much again Lawrence for having me on the program, it's been an

absolute delight. I've really enjoyed the conversation myself.

If people would like to follow up and stay in touch and follow this

work you can go to my website which is

kiraallmann.com. You can follow

cherrysoupproductions.com

which is where we're doing a lot of the collaborative work and

collaborative development around Critical Tech Literacy resources.

There we will be putting up some free open resources on how you

could run workshops and sessions on Critical Tech Literacy over the

coming months.

And I'm also on social media. You can find me on

Twitter

and

Instagram, all at @kiraallmann, just my name so it's very easy.

L Great, everyone go

follow Kira. She publishes a lot of amazing research and great

articles.

Thank you so much and we'll keep in touch!

K Great! I look forward to it.

You can connect with Dr. Kira Allmann on Twitter, Instagram or via her website kiraallmann.com.

Worth Checking

"Atlas of AI" by Kate Crawford. Power, Politics, and the Planetary Costs of Artificial Intelligence

"Review: Why Facebook can never fix itself" - Reporters Sheera Frenkel and Cecilia Kang reveal Facebook's fundamental flaws through a detailed account of its years between two US elections.

"Why We Should End the Data Economy" by Carissa Véliz. The data economy depends on violating our right to privacy on a massive scale, collecting as much personal data as possible for profit.

If you've enjoyed this publication, consider subscribing to CFT's newsletter to get this content delivered to your inbox.